From knowing to understanding Kubernetes Ingress

Source: cajieh.com

Introduction

In Kubernetes, an Ingress is an API object that manages external access to the Services in a cluster. It typically provides HTTP and HTTPS routing to Services based on hostnames and paths, along with features such as load balancing, SSL/TLS termination, and name-based virtual hosting. An Ingress exposes only HTTP and HTTPS traffic; it does not expose arbitrary ports or protocols the way NodePort or LoadBalancer Services can. Unlike a Service, an Ingress does nothing on its own: it requires an Ingress controller. The Ingress controller is responsible for reading the rules in the Ingress resource and configuring traffic routing accordingly. An Ingress controller typically fulfills the Ingress with a load balancer, though it may also configure an edge router or additional frontends. Popular Ingress controllers include NGINX, Traefik, and HAProxy.
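To make name-based virtual hosting and TLS termination more concrete, here is a minimal sketch of an Ingress manifest. Everything in it is hypothetical: the hostnames app.example.com and api.example.com, the Services app-svc and api-svc, and the Secret example-tls, which is assumed to be an existing kubernetes.io/tls Secret in the same namespace.

```yaml
# Hypothetical example: two hostnames routed to two Services,
# with TLS terminated at the Ingress controller.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-virtual-hosts     # hypothetical name
spec:
  tls:
  - hosts:
    - app.example.com
    - api.example.com
    secretName: example-tls       # assumed existing kubernetes.io/tls Secret
  rules:
  - host: app.example.com         # requests with this Host header go to app-svc
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: app-svc
            port:
              number: 80
  - host: api.example.com         # requests with this Host header go to api-svc
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: api-svc
            port:
              number: 80
```

With this configuration, the controller terminates HTTPS using the certificate in example-tls and forwards the decrypted requests to app-svc or api-svc depending on the requested host.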

Prerequisites:

- Basic knowledge of container technology and Kubernetes

- Proficiency with command-line tools, e.g. a Bash terminal

- Access to a Kubernetes cluster (the example below uses kind) if you want to experiment with the example in this tutorial

Difference between Kubernetes Ingress and Services

A Kubernetes Ingress and a Service (ClusterIP, NodePort, or LoadBalancer) are different types of resources, each with its own role. To highlight the differences, I will focus on NodePort and LoadBalancer Services, since those are the Service types used for external communication, similar to Ingress.

NodePort Services, LoadBalancer Services, and Ingress are all ways of exposing an application running inside the cluster to the outside world, but each does it differently:

- NodePort: A NodePort Service is a basic way to get external traffic to a Service in the cluster. It opens a specific port on every node, and any traffic sent to that port on any node is forwarded to the Service. However, NodePort has limitations: the port numbers you can assign are restricted to a specific range (30000-32767 by default), and directing clients to the right node and port is left to the cluster administrators.

- LoadBalancer: A LoadBalancer Service is essentially a NodePort Service with an additional external load balancer in front of it. The load balancer distributes incoming traffic across the cluster's nodes, so clients no longer need to target a specific node. This is more convenient than NodePort because the load balancer handles the routing. However, Kubernetes does not provide the load balancer itself, so this is typically only available when the cluster runs on a cloud provider that supports it. LoadBalancer Services are also typically more expensive, and because each one gets its own load balancer, they can still result in multiple entry points to the cluster, similar to NodePort, but managed by the cloud provider instead of the cluster administrator.

- Ingress: Unlike NodePort and LoadBalancer, Ingress is not a Service type and does not expose a Service by itself. Instead, it is a collection of routing rules that govern how external users access Services running in the cluster. It provides more flexibility and control over routing, including TLS termination, path-based routing, and host-based routing. However, an Ingress controller, such as NGINX, Traefik, or HAProxy, must be running for the rules to take effect.

So, while NodePort and LoadBalancer are Service types that expose your Services and create a direct route from the external network to them, Ingress is about routing and controlling traffic to Services using host- and path-based rules, typically through a single entry point.
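For comparison, here is a sketch of how the two Service types above are declared. The names my-app-nodeport and my-app-lb, the app: my-app label, and the port numbers are hypothetical; nodePort 30080 is simply a value inside the default range.

```yaml
# Hypothetical NodePort Service: opens port 30080 on every node
apiVersion: v1
kind: Service
metadata:
  name: my-app-nodeport
spec:
  type: NodePort
  selector:
    app: my-app
  ports:
  - protocol: TCP
    port: 80          # port of the Service inside the cluster
    targetPort: 8080  # port the container listens on
    nodePort: 30080   # must fall inside the node port range (default 30000-32767)
---
# Hypothetical LoadBalancer Service: a supporting cloud provider
# provisions an external load balancer for it
apiVersion: v1
kind: Service
metadata:
  name: my-app-lb
spec:
  type: LoadBalancer
  selector:
    app: my-app
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8080
```

Kubernetes allocates node ports for a LoadBalancer Service by default as well, which is why it is often described as a NodePort with an external load balancer in front. Each LoadBalancer Service gets its own load balancer, whereas one Ingress controller can serve many Services behind a single entry point.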

How Ingress Works

Here's a step-by-step explanation of how it works:

- An external user makes a request to an application running in the Kubernetes cluster, typically through a URL.

- The request reaches the Ingress controller, which runs as a Pod in the cluster and is responsible for interpreting the rules in Ingress resources.

- The Ingress controller checks the rules defined in the Ingress resources to determine where to route the request, based on factors such as the requested host or the URL path.

- The Ingress controller forwards the request to the appropriate Service according to those rules.

- The Service routes the request to one of its Pods.

- The Pod running the application handles the request and responds accordingly.

In short, implementing Ingress in a Kubernetes cluster means running an Ingress controller in the cluster (one you install yourself or one provided by your cloud provider) and defining rules in Ingress resources that route requests to the corresponding Services.
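To illustrate how the controller chooses a backend when more than one rule could match (the third step above), here is a sketch with hypothetical Services frontend-svc, api-svc, and health-svc. Per the Kubernetes Ingress documentation, the longest matching path wins, and when an Exact and a Prefix path are equally long, Exact takes precedence.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: path-precedence-example   # hypothetical name
spec:
  rules:
  - http:                         # no host: the rule applies to all incoming hosts
      paths:
      - path: /
        pathType: Prefix          # catch-all: matches every path
        backend:
          service:
            name: frontend-svc
            port:
              number: 80
      - path: /api
        pathType: Prefix          # matches /api, /api/, /api/users, but not /apiv2
        backend:
          service:
            name: api-svc
            port:
              number: 80
      - path: /api/health
        pathType: Exact           # matches only /api/health
        backend:
          service:
            name: health-svc
            port:
              number: 80
```

Under these rules, /api/health goes to health-svc, /api/users to api-svc, and /about to frontend-svc. A request for /api/health/ (with a trailing slash) does not match the Exact path, so it falls back to the /api Prefix rule and api-svc.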

A sample Kubernetes Ingress resource manifest with explanations:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sample-ingress
  namespace: sample-ns
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx-example
  rules:
  - host: mydomain.com
    http:
      paths:
      - pathType: Prefix
        path: "/myapp"
        backend:
          service:
            name: my-service
            port:
              number: 80
```

Explanation of each part of the Ingress resource defined above:

Like any other Kubernetes object, an Ingress resource requires the apiVersion, kind, and metadata fields. The metadata can include an annotations field for attaching arbitrary non-identifying metadata to the object. Here it sets a rewrite rule for the NGINX Ingress controller, so that requests matching /myapp are forwarded to the backend as /. The spec of an Ingress resource defines the desired state of the Ingress in the given namespace. Each field of the spec is described below:
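Because the rewrite-target: / annotation sends every request matched by /myapp to the backend as /, any sub-path after /myapp is not passed on. If the application needs that remainder (for example /myapp/login arriving as /login), the ingress-nginx documentation describes a capture-group form. A sketch adapted to the sample's names, assuming the ingress-nginx controller (other controllers use different annotations):

```yaml
# Sketch adapted from the ingress-nginx rewrite documentation;
# assumes the ingress-nginx controller and reuses the sample's names
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sample-ingress
  namespace: sample-ns
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
    # $2 refers to the second capture group in the path below
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx-example
  rules:
  - host: mydomain.com
    http:
      paths:
      - path: /myapp(/|$)(.*)
        pathType: ImplementationSpecific   # regex paths are controller-specific
        backend:
          service:
            name: my-service
            port:
              number: 80
```

With this version, mydomain.com/myapp and mydomain.com/myapp/ reach my-service as /, while mydomain.com/myapp/login reaches it as /login.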

- ingressClassName: Specifies the IngressClass that should handle this Ingress resource. If ingressClassName is omitted, a default IngressClass should be defined in the cluster.
- rules: Lists the routing rules exposed via the Ingress.
- host: Defines the host the rule applies to.
- http: Defines the HTTP(S) routes for that host.
- paths: Lists the paths exposed on the host.
- pathType: Defines the matching pattern, here Prefix. Prefix matches any request whose path starts with the specified path, compared element by element on / boundaries (so /myapp matches /myapp/login but not /myapplication). See the Kubernetes documentation on Ingress path types for the other types, Exact and ImplementationSpecific.
- path: Defines the path for the rule.
- backend: Defines where matching traffic should be routed.
- service: Defines the Service that receives the traffic.
- name: The name of that Service.
- port: The port on the Service that receives the traffic.
- number: 80: The port number on the Service to which the traffic is sent.
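An Ingress without ingressClassName relies on a default IngressClass. The sketch below, modeled on the example in the Kubernetes documentation, defines the nginx-example class used in the sample and marks it as the cluster default; the controller value k8s.io/ingress-nginx assumes the ingress-nginx controller.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx-example
  annotations:
    # Marks this class as the default for Ingresses that omit ingressClassName
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  controller: k8s.io/ingress-nginx   # the controller that implements this class
```

Only one IngressClass should carry the default annotation; if several do, the admission controller rejects new Ingresses that omit ingressClassName.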

Example of an Ingress implementation exposing Services externally

In this section, we'll demonstrate how to expose Services using Ingress on a kind (Kubernetes in Docker) cluster. For Ingress to work correctly, the kind cluster must be created with the extraPortMappings config option, which forwards traffic on host ports (such as 80 and 443) to the Ingress controller running on a node. See the official kind documentation on Ingress for how to set up such a cluster.
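For reference, a cluster configuration with extraPortMappings might look like the sketch below, based on the kind Ingress guide. The file name kind-config.yaml is our own choice, and the ingress-ready=true node label is included because the kind-specific ingress-nginx manifest has historically used it as a node selector; it is harmless if your version does not need it.

```yaml
# kind-config.yaml (hypothetical file name)
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  # Adds the ingress-ready=true label to the node
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-labels: "ingress-ready=true"
  extraPortMappings:
  - containerPort: 80    # port 80 on the node container...
    hostPort: 80         # ...is reachable as port 80 on your machine
    protocol: TCP
  - containerPort: 443
    hostPort: 443
    protocol: TCP
```

Create the cluster from this file with kind create cluster --config kind-config.yaml.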

Setting Up An Ingress Controller

After setting up the cluster, follow these steps to install the NGINX Ingress Controller and create a Namespace, Deployments, Services, and an Ingress resource.

Step 1# - Deploy the NGINX Ingress Controller (exposed via NodePort)

Install the NGINX Ingress Controller from the kubernetes/ingress-nginx repository using its kind-specific manifest, which exposes the controller through a NodePort Service:

```bash
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/deploy/static/provider/kind/deploy.yaml
```

This creates the ingress-nginx namespace and installs the NGINX Ingress Controller in it. Check that the controller has been installed correctly with the following command (press Ctrl+C to stop watching):

```bash
kubectl get pods -n ingress-nginx -l app.kubernetes.io/name=ingress-nginx --watch
```

```
NAME                                        READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-ffp5l        0/1     Completed   0          2m45s
ingress-nginx-admission-patch-cv76b         0/1     Completed   1          2m45s
ingress-nginx-controller-794cbf6fb9-fjgxw   1/1     Running     0          2m45s
```

The two admission Pods belong to one-off Jobs, so Completed is their expected status; the controller Pod should be Running.

You can also view all the resources created in the ingress-nginx namespace:

```bash
kubectl get all -n ingress-nginx
```

```
NAME                                            READY   STATUS      RESTARTS   AGE
pod/ingress-nginx-admission-create-gzvqw        0/1     Completed   0          42m
pod/ingress-nginx-admission-patch-pjjqn         0/1     Completed   0          42m
pod/ingress-nginx-controller-78dcdd7454-mwgrk   1/1     Running     0          42m

NAME                                         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
service/ingress-nginx-controller             NodePort    10.96.92.148   <none>        80:31732/TCP,443:32255/TCP   42m
service/ingress-nginx-controller-admission   ClusterIP   10.96.201.38   <none>        443/TCP                      42m

NAME                                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/ingress-nginx-controller   1/1     1            1           42m

NAME                                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/ingress-nginx-controller-78dcdd7454   1         1         1       42m

NAME                                       COMPLETIONS   DURATION   AGE
job.batch/ingress-nginx-admission-create   1/1           9s         42m
job.batch/ingress-nginx-admission-patch    1/1           9s         42m
```

Step 2# - Create a Local DNS Entry for carolina.ingress.com

To make carolina.ingress.com resolve on your local machine, add an entry to the /etc/hosts file that maps the hostname to the localhost IP address, 127.0.0.1. Open the file:

```bash
vim /etc/hosts
```

Add the entry:

```
127.0.0.1 carolina.ingress.com
```

Save the file and exit the editor. Your machine now resolves carolina.ingress.com to 127.0.0.1, and thanks to the kind port mapping, requests to port 80 on localhost reach the Ingress controller.

Note: If you get “Error: "/private/etc/hosts" is read-only (add ! to override)”, the file requires superuser privileges to edit. Open /etc/hosts in vim with sudo instead:

```bash
sudo vim /etc/hosts
```

Step 3# - Create a Namespace

```bash
kubectl create ns carolina-ns
```

```
namespace/carolina-ns created
```

Step 4# - Create the Deployments in carolina-ns

Create two Deployments in the carolina-ns namespace. Each Deployment runs an nginx container serving on port 80, plus an init container that writes a greeting to an index.html file: "Good day from Cary, North Carolina!" for cary-deploy and "Good day from Apex, North Carolina!" for apex-deploy. The init container uses the busybox image to run a simple echo command that writes the file to an emptyDir volume shared with the nginx container, which then serves that file from its web root. See the manifest below:

deployments.yaml file:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cary-deploy
  namespace: carolina-ns
  labels:
    app: cary
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cary
  template:
    metadata:
      labels:
        app: cary
    spec:
      volumes:
      - name: workdir
        emptyDir: {}
      initContainers:
      - name: init
        image: busybox
        command: ['sh', '-c', 'echo "Good day from Cary, North Carolina!" > /work-dir/index.html']
        volumeMounts:
        - name: workdir
          mountPath: "/work-dir"
      containers:
      - name: nginx
        image: nginx:1.21.5-alpine
        volumeMounts:
        - name: workdir
          mountPath: "/usr/share/nginx/html"
        ports:
        - containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: apex-deploy
  namespace: carolina-ns
  labels:
    app: apex
spec:
  replicas: 1
  selector:
    matchLabels:
      app: apex
  template:
    metadata:
      labels:
        app: apex
    spec:
      volumes:
      - name: workdir
        emptyDir: {}
      initContainers:
      - name: init
        image: busybox
        command: ['sh', '-c', 'echo "Good day from Apex, North Carolina!" > /work-dir/index.html']
        volumeMounts:
        - name: workdir
          mountPath: "/work-dir"
      containers:
      - name: nginx
        image: nginx:1.21.5-alpine
        volumeMounts:
        - name: workdir
          mountPath: "/usr/share/nginx/html"
        ports:
        - containerPort: 80
```

```bash
kubectl create -f deployments.yaml
```

```
deployment.apps/cary-deploy created
deployment.apps/apex-deploy created
```

Step 5# - Expose the Deployments with ClusterIP Services

svc.yaml file:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: cary-svc
  namespace: carolina-ns
spec:
  selector:
    app: cary
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  name: apex-svc
  namespace: carolina-ns
spec:
  selector:
    app: apex
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: ClusterIP
```

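Apply svc.yaml:

```bash
kubectl create -f svc.yaml
```

```
service/cary-svc created
service/apex-svc created
```

This exposes each Deployment inside the cluster through its own ClusterIP Service on port 80. ClusterIP Services are not reachable from outside the cluster; the Ingress created in the next step provides the external access.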

Step 6# - Create an Ingress Resource

ingress.yaml file:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: carolina-ingress
  namespace: carolina-ns
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: carolina.ingress.com
    http:
      paths:
      - path: /cary
        pathType: Prefix
        backend:
          service:
            name: cary-svc
            port:
              number: 80
      - path: /apex
        pathType: Prefix
        backend:
          service:
            name: apex-svc
            port:
              number: 80
```

Apply ingress.yaml:

```bash
kubectl create -f ingress.yaml
```

```
ingress.networking.k8s.io/carolina-ingress created
```

View all the resources created:

```bash
kubectl get deploy,po,svc,ing -n carolina-ns
```

```
NAME                          READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/apex-deploy   1/1     1            1           86m
deployment.apps/cary-deploy   1/1     1            1           86m

NAME                               READY   STATUS    RESTARTS   AGE
pod/apex-deploy-5499bb4ff-4m9cj    1/1     Running   0          86m
pod/cary-deploy-5cfb67d9f7-ktpfr   1/1     Running   0          86m

NAME               TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
service/apex-svc   ClusterIP   10.96.149.204   <none>        80/TCP    2m23s
service/cary-svc   ClusterIP   10.96.9.134     <none>        80/TCP    2m23s

NAME                                         CLASS    HOSTS                  ADDRESS     PORTS   AGE
ingress.networking.k8s.io/carolina-ingress   <none>   carolina.ingress.com   localhost   80      86m
```

Note: If the Address column is not populated in the Ingress, wait for a minute or two and try again.
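If the ADDRESS column stays empty, one possible cause is that the controller is ignoring the Ingress because ingress.yaml does not set ingressClassName: recent ingress-nginx versions only process class-less Ingresses when started with the --watch-ingress-without-class flag or when a default IngressClass exists. A sketch of the change, assuming the IngressClass installed by the ingress-nginx manifest is named nginx (kubectl get ingressclass lists the actual names):

```yaml
# Fragment of ingress.yaml: only the start of the spec changes
spec:
  ingressClassName: nginx   # assumption: IngressClass installed by ingress-nginx is named "nginx"
  rules:
  # ...keep the carolina.ingress.com rules for /cary and /apex exactly as before...
```

Re-apply the updated file with kubectl apply -f ingress.yaml.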

Verify the Ingress by requesting both paths with curl:

```bash
curl carolina.ingress.com/cary
```

```
Good day from Cary, North Carolina!
```

```bash
curl carolina.ingress.com/apex
```

```
Good day from Apex, North Carolina!
```

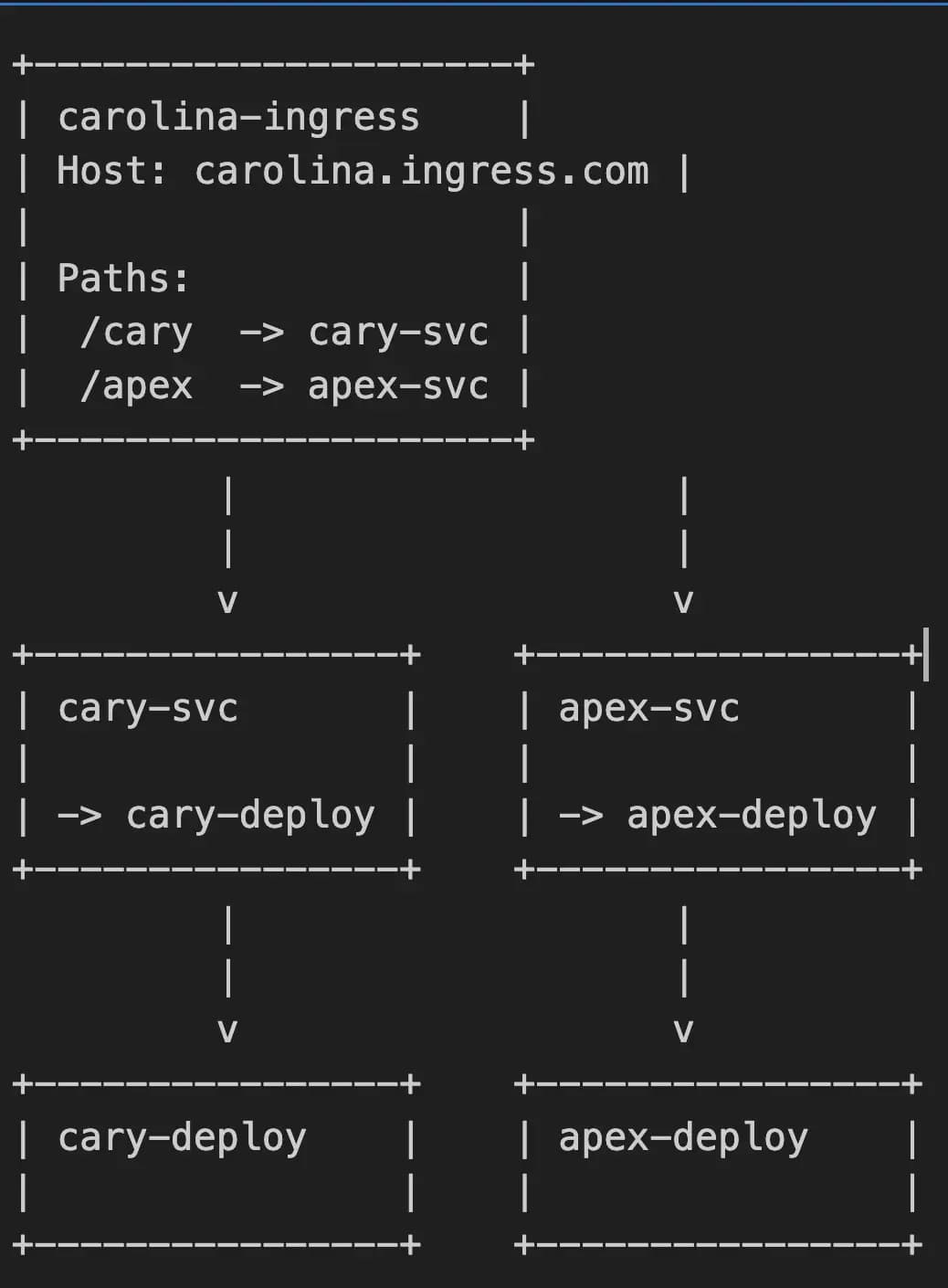

Awesome! It works! The carolina-ingress Ingress resource routes traffic for the host carolina.ingress.com based on the paths /cary and /apex, which go to the cary-svc and apex-svc Services, respectively. Because of the rewrite-target: / annotation, each backend receives the request as /, so nginx serves its index.html. The cary-svc and apex-svc Services in turn expose the cary-deploy and apex-deploy Deployments. This implementation demonstrates how an Ingress resource connects external traffic to Services and Deployments in a Kubernetes cluster.

Conclusion

We successfully implemented a Kubernetes Ingress and accessed the cary-deploy and apex-deploy Deployments through the carolina-ingress Ingress resource. The Ingress resource makes the applications in these Deployments accessible externally by defining routing rules for inbound traffic, while the local /etc/hosts entry resolves the carolina.ingress.com domain to our machine. Remember, an Ingress controller (such as NGINX or Traefik) must be running in your cluster for Ingress resources to function. That's it on how to implement Ingress in Kubernetes.

I hope this helps! Go to the contact page and let me know if you have any further questions.