Fundamentals of Kubernetes NetworkPolicy Explained - Part 1

Source: www.cajieh.com

Prerequisites:

- Basic knowledge of container technology and Kubernetes

- Proficiency with command-line tools, e.g. a Bash terminal

- Access to a Kubernetes cluster that supports NetworkPolicy if you want to experiment with the example in this tutorial

Introduction

In this tutorial, I’ll explain the fundamentals of Kubernetes NetworkPolicy with an example. In Kubernetes, NetworkPolicies regulate the flow of traffic between Pods inside a cluster. They are similar to firewall rules in traditional network infrastructure: they dictate how groups of pods may communicate with each other and with other network endpoints. By default, pods are non-isolated and accept connections from any source. Once a NetworkPolicy selects a pod, that pod becomes isolated in the direction the policy covers (ingress, egress, or both). From then on, only connections explicitly allowed by a policy get through, and everything else is rejected.

How NetworkPolicy works

Network Policies target a set of Pods and define the ingress (inbound) and egress (outbound) endpoints those Pods can communicate with. Network Policies are additive and do not conflict with each other. When more than one policy applies to a particular pod in a given direction, the connections allowed to or from that pod in that direction are the union of what the applicable policies allow. Therefore, the order of evaluation does not influence the outcome. For a connection from a source pod to a destination pod to be allowed, both the egress policy on the source pod and the ingress policy on the destination pod must allow it. If either side disallows the connection, it is denied.
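To make the additive behaviour concrete, here is a minimal sketch of two policies that select the same pods. The policy names, the app=monitoring label and port 9090 are assumptions chosen purely for illustration; they are not part of this tutorial's setup.

```yaml
# Policy 1 (hypothetical): pods labelled app=backend accept TCP 80 from app=frontend pods.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-allow-frontend    # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: frontend
    ports:
    - protocol: TCP
      port: 80
---
# Policy 2 (hypothetical): the same pods also accept TCP 9090 from app=monitoring pods.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-allow-monitoring  # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: monitoring         # assumed label, for illustration only
    ports:
    - protocol: TCP
      port: 9090                  # assumed port, for illustration only
```

With both applied, pods labelled app=backend accept TCP 80 from frontend pods and TCP 9090 from monitoring pods. Neither policy overrides the other, and NetworkPolicy has no deny rule that could subtract from this union.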

There are four ways to select the endpoints a rule applies to:

- podSelector: Selects pods in the policy's own namespace that match a specific label and allows traffic to/from them.

- namespaceSelector: Selects particular namespaces; all pods within those namespaces are allowed to communicate.

- podSelector and namespaceSelector: Combines a namespaceSelector and a podSelector in a single entry to select specific Pods within certain namespaces.

- ipBlock: Selects an IP address range (CIDR block) and allows connections to/from addresses within it.
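The difference between combining the two selectors in one entry and listing them as separate entries is easy to miss, because it comes down to a single dash. The fragment below is a sketch with illustrative labels; the two rules are shown side by side only for comparison, and a real policy would normally use one or the other.

```yaml
# Fragment of spec.ingress, not a complete manifest.
ingress:
# Rule A: ONE entry holding both selectors (logical AND).
# Matches only pods labelled role=backend that live in namespaces labelled app=testproject.
- from:
  - namespaceSelector:
      matchLabels:
        app: testproject
    podSelector:
      matchLabels:
        role: backend
# Rule B: TWO entries (logical OR).
# Matches every pod in namespaces labelled app=testproject,
# or pods labelled role=backend in the policy's own namespace.
- from:
  - namespaceSelector:
      matchLabels:
        app: testproject
  - podSelector:
      matchLabels:
        role: backend
```

Rule B is considerably more permissive than Rule A, so it is worth double-checking the indentation whenever both selectors appear in the same rule.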

A sample NetworkPolicy object specification with explanation

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: frontend
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          role: backend
    - namespaceSelector:
        matchLabels:
          app: testproject
    - ipBlock:
        cidr: 172.17.0.0/16
        except:
        - 172.17.1.0/24
    ports:
    - protocol: TCP
      port: 3306
  egress:
  - to:
    - ipBlock:
        cidr: 10.0.0.0/24
    - podSelector:
        matchLabels:
          role: apiserver
    - namespaceSelector:
        matchLabels:
          app: wp-backend
    ports:
    - protocol: TCP
      port: 5978

A NetworkPolicy requires apiVersion, kind, and metadata fields like any other Kubernetes configuration. The spec object contains all the information needed to define a specific network policy in the given namespace; its rules can also reference peers in other namespaces.

podSelector: The podSelector determines the set of pods the policy applies to. The NetworkPolicy example above targets pods with the app=frontend label. An empty podSelector ({}) targets all pods in the namespace.

policyTypes: The policyTypes include Ingress, Egress, or both. This field specifies whether the policy applies to incoming traffic to the selected pods, outgoing traffic from the selected pods, or both. If no policyTypes are specified, Ingress is set by default, and Egress is set if there are any egress rules in the NetworkPolicy.
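The defaulting rule has a practical consequence that a short sketch may clarify. The manifest below is a hypothetical egress-only policy (the name is an assumption; the CIDR and port are borrowed from the sample above) that lists policyTypes explicitly.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: frontend-egress-only   # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: frontend
  # Listing only Egress means this policy does not isolate ingress to the frontend pods.
  # If policyTypes were omitted, Ingress would be implied as well and, because the
  # policy has no ingress rules, all inbound traffic to these pods would be denied.
  policyTypes:
  - Egress
  egress:
  - to:
    - ipBlock:
        cidr: 10.0.0.0/24
    ports:
    - protocol: TCP
      port: 5978
```

Spelling out policyTypes in every policy is a cheap way to avoid relying on the defaults.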

Ingress: Each NetworkPolicy ingress rule allows traffic that matches both the from and ports sections. Ingress is the inbound network traffic directed towards a pod or a collection of pods, filtered by the originating pods (podSelector), namespaces (namespaceSelector), or IP addresses (ipBlock). The example policy includes a single rule, which allows traffic on one port (TCP 3306) from three different sources, specified by a podSelector, a namespaceSelector, or an ipBlock.
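Either half of a rule can be left out, in which case that half matches everything. The fragment below sketches both cases, reusing the port and label from the sample above; it is an illustration, not part of this tutorial's setup.

```yaml
# Fragment of spec.ingress, not a complete manifest.
ingress:
# No "from" section: any source may connect, but only on TCP 3306.
- ports:
  - protocol: TCP
    port: 3306
# No "ports" section: pods labelled role=backend may connect on any port.
- from:
  - podSelector:
      matchLabels:
        role: backend
```

The same applies to the to and ports sections of an egress rule.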

Egress: A NetworkPolicy may include a list of allowed egress rules. Each rule permits traffic that matches both the to and ports sections.

The example policy includes a single egress rule, which allows traffic on one port (TCP 5978) to any destination within the 10.0.0.0/24 IP range, to pods that match the podSelector, or to pods in namespaces that match the namespaceSelector.

An example of Kubernetes NetworkPolicy specifications and implementation

Let’s start by creating three pods in the default namespace, namely frontend, backend and database, using the nginxdemos/hello:plain-text image for all three and setting the labels accordingly.

I usually define an alias to shorten the kubectl command, e.g.

alias k=kubectl

This lets me type k instead of kubectl, which makes typing easier and faster.

k run frontend --image=nginxdemos/hello:plain-text --port 80 -l="app=frontend"

pod/frontend created

k run backend --image=nginxdemos/hello:plain-text --port 80 -l="app=backend"

pod/backend created

k run database --image=nginxdemos/hello:plain-text --port 80 -l="app=database"

pod/database created

Verify that the pods can communicate with each other

Get the pods' IP addresses:

k get po -o wide --show-labels

NAME       READY   STATUS    RESTARTS   AGE    IP            NODE     NOMINATED NODE   READINESS GATES   LABELS
backend    1/1     Running   0          79s    192.168.1.8   node01   <none>           <none>            app=backend
database   1/1     Running   0          56s    192.168.1.9   node01   <none>           <none>            app=database
frontend   1/1     Running   0          114s   192.168.1.7   node01   <none>           <none>            app=frontend

Verify frontend can access backend

k exec -it frontend -- curl 192.168.1.8

Server address: 192.168.1.8:80

Server name: backend

Date: 22/Jun/2024:18:35:15 +0000

URI: /

Request ID: 6bb1e29fbbb62615fd5a3d9839ffa57b

Verify backend can access frontend

k exec -it backend -- curl 192.168.1.7

Server address: 192.168.1.7:80

Server name: frontend

Date: 22/Jun/2024:18:34:22 +0000

URI: /

Request ID: 48c012f72525a4d90b2d6231a3215a44

Verify frontend can access database

k exec -it frontend -- curl 192.168.1.9

Server address: 192.168.1.9:80

Server name: database

Date: 22/Jun/2024:18:36:28 +0000

URI: /

Request ID: 83f0322e0148616af5d52615fd9ea069

There is no isolation and no restriction is enforced. This is expected, since no NetworkPolicy has been applied yet.

Deny all traffic in the default namespace: NetworkPolicy specification

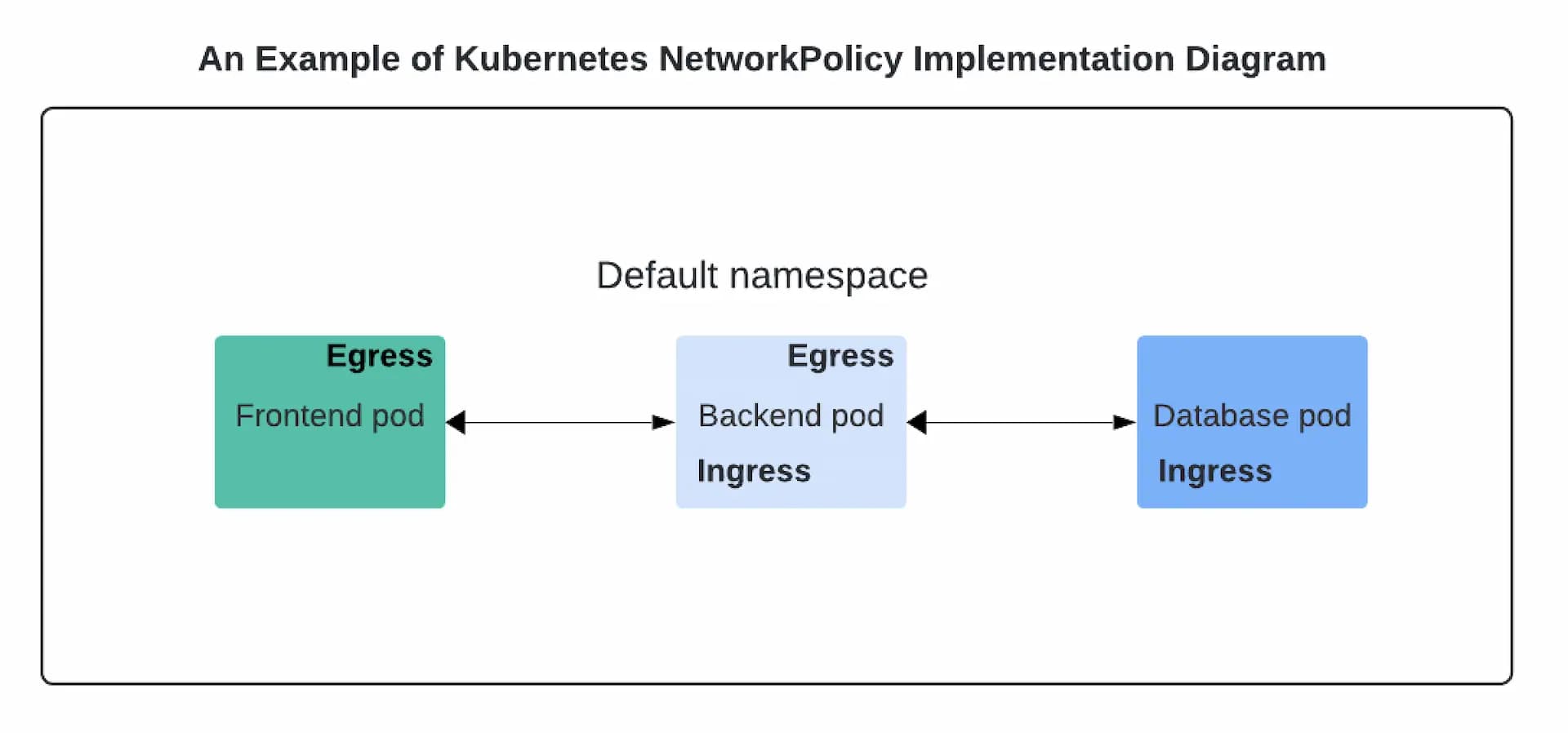

Let’s start by implementing NetworkPolicies to restrict the pods' access to each other. See the diagram above for a glance at the architectural setup of the pods and NetworkPolicy types. To begin with, it's crucial to block all traffic by default. This ensures that any new pods added to the namespace are isolated and restricted from the start.

deny-all-traffic-default-ns.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: network-policy
  namespace: default
spec:
  podSelector: {}   # empty selector: applies to every pod in the namespace
  policyTypes:
  - Ingress
  - Egress
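For comparison, a narrower variant of this default-deny policy is sketched below. It isolates only inbound traffic and leaves outbound traffic open. The name default-deny-ingress is an assumption, and this variant is not applied anywhere in this tutorial.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress   # hypothetical name
  namespace: default
spec:
  podSelector: {}              # empty selector: every pod in the namespace
  policyTypes:
  - Ingress                    # only inbound traffic is isolated; egress stays open
```

The tutorial continues with the stricter version above, which denies both directions.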

Implementation

Then apply the NetworkPolicy specification using the deny-all-traffic-default-ns.yaml file

k create -f deny-all-traffic-default-ns.yaml

networkpolicy.networking.k8s.io/network-policy created

Verify the connection again

k exec -it frontend -- curl 192.168.1.8

curl: (7) Failed to connect to 192.168.1.8 port 80 after 1012 ms: Couldn't connect to server

command terminated with exit code 7

k exec -it backend -- curl 192.168.1.7

curl: (7) Failed to connect to 192.168.1.7 port 80 after 1017 ms: Couldn't connect to server

command terminated with exit code 7

Cool! It works! The pod-to-pod communication has been denied as specified in the NetworkPolicy specification above. But wait, we have to allow certain connections in an ideal situation, right? Let’s create three NetworkPolicy rules for allowing the following:

- Egress: Allow frontend to send traffic to backend

- Ingress and Egress: Allow backend to receive traffic from frontend and send traffic to database

- Ingress: Allow database to receive traffic from backend

Frontend Egress NetworkPolicy specification

allow-frontend-traffic-to-backend.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: frontend-network-policy
spec:
  podSelector:
    matchLabels:
      app: frontend   # the pods were created with -l "app=...", so they carry no run= label
  policyTypes:
  - Egress
  egress:
  - to:
    - podSelector:
        matchLabels:
          app: backend
    ports:
    - protocol: TCP
      port: 80

Implementation

Then apply the NetworkPolicy specification using the allow-frontend-traffic-to-backend.yaml file

k create -f allow-frontend-traffic-to-backend.yaml

networkpolicy.networking.k8s.io/frontend-network-policy created

Backend Ingress NetworkPolicy specification

allow-backend-from-frontend-and-to-database.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-np
spec:
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: frontend
    ports:
    - protocol: TCP
      port: 80

Implementation

Then apply the NetworkPolicy specification using the allow-backend-from-frontend-and-to-database.yaml file

k create -f allow-backend-from-frontend-and-to-database.yaml

networkpolicy.networking.k8s.io/backend-np created

Verify that Frontend can access backend

k exec -it frontend -- curl 192.168.1.8

Server address: 192.168.1.8:80

Server name: backend

Date: 22/Jun/2024:18:50:09 +0000

URI: /

Request ID: 6de172a80c3dceb2120fd85caeb09240

Backend Egress NetworkPolicy specification

Let’s modify the allow-backend-from-frontend-and-to-database.yaml and add an Egress rule for database pod

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-np
spec:
  podSelector:
    matchLabels:
      app: backend   # the pods were created with -l "app=...", so they carry no run= label
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: frontend
    ports:
    - protocol: TCP
      port: 80
  egress: # Add the following egress properties to allow backend traffic to database
  - to:
    - podSelector:
        matchLabels:
          app: database
    ports:
    - protocol: TCP
      port: 80

Implementation

Apply the change using apply command

k apply -f allow-backend-from-frontend-and-to-database.yaml

Note: The apply command is used instead of create since we are updating an existing resource in the cluster

Before creating the NetworkPolicy that lets the database pod accept traffic from backend, let’s check whether the database is already accessible after applying the backend egress rule.

k exec -it backend -- curl 192.168.1.9

curl: (7) Failed to connect to 192.168.1.9 port 80 after 1002 ms: Couldn't connect to server

command terminated with exit code 7

No, access is still denied: the deny-all policy isolates the database pod, and no NetworkPolicy rule yet allows it to accept incoming traffic from the backend pod.

Database Ingress NetworkPolicy specification

Finally, let's create the NetworkPolicy Ingress rule for database pod so that incoming traffic from backend pod is allowed.

allow-database-to-receive-traffic-from-backend.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: database-np
spec:
  podSelector:
    matchLabels:
      app: database
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: backend
    ports:
    - protocol: TCP
      port: 80

Create the database NetworkPolicy

k create -f allow-database-to-receive-traffic-from-backend.yaml

networkpolicy.networking.k8s.io/database-np created

Verify the connection

k exec -it backend -- curl 192.168.1.9

Server address: 192.168.1.9:80

Server name: database

Date: 22/Jun/2024:19:07:28 +0000

URI: /

Request ID: 64e68c2bb7732e25797113451a536b7a

Check if newly added pods can access any of the existing pods

Verify whether a newly created pod can access an existing pod such as frontend or backend

k run pod-test --image=nginx

Get the new pod's IP address using k get po pod-test -o wide

k exec -it pod-test -- curl 192.168.1.7

curl: (7) Failed to connect to 192.168.1.7 port 80 after 1022 ms: Couldn't connect to server

command terminated with exit code 7

Try to access the backend pod on IP address 192.168.1.8

k exec -it pod-test -- curl 192.168.1.8

curl: (7) Failed to connect to 192.168.1.8 port 80 after 1001 ms: Couldn't connect to server

command terminated with exit code 7

Nice, the frontend, backend and database pods now have restricted access based on the NetworkPolicy specifications, and are not accessible from newly added pods.

Conclusion:

That’s it! By implementing these NetworkPolicy specifications, we've successfully blocked all traffic in the default namespace by default. We also enabled the frontend Pod to send traffic to the backend, allowed the backend to receive traffic from the frontend and send traffic to the database, and allowed the database to receive traffic from the backend.

Part 2 of this tutorial covers how to control access to namespaces using the NetworkPolicy resource. You can check it out here: Fundamentals of Kubernetes Network Policy Simplified - Part 2

If you liked this tutorial, let us know through the contact form.

Happy learning!